360MonoDepth: High-Resolution 360° Monocular Depth Estimation

CVPR 2022

Manuel Rey-Area, Mingze Yuan, Christian Richardt

University of Bath

Abstract

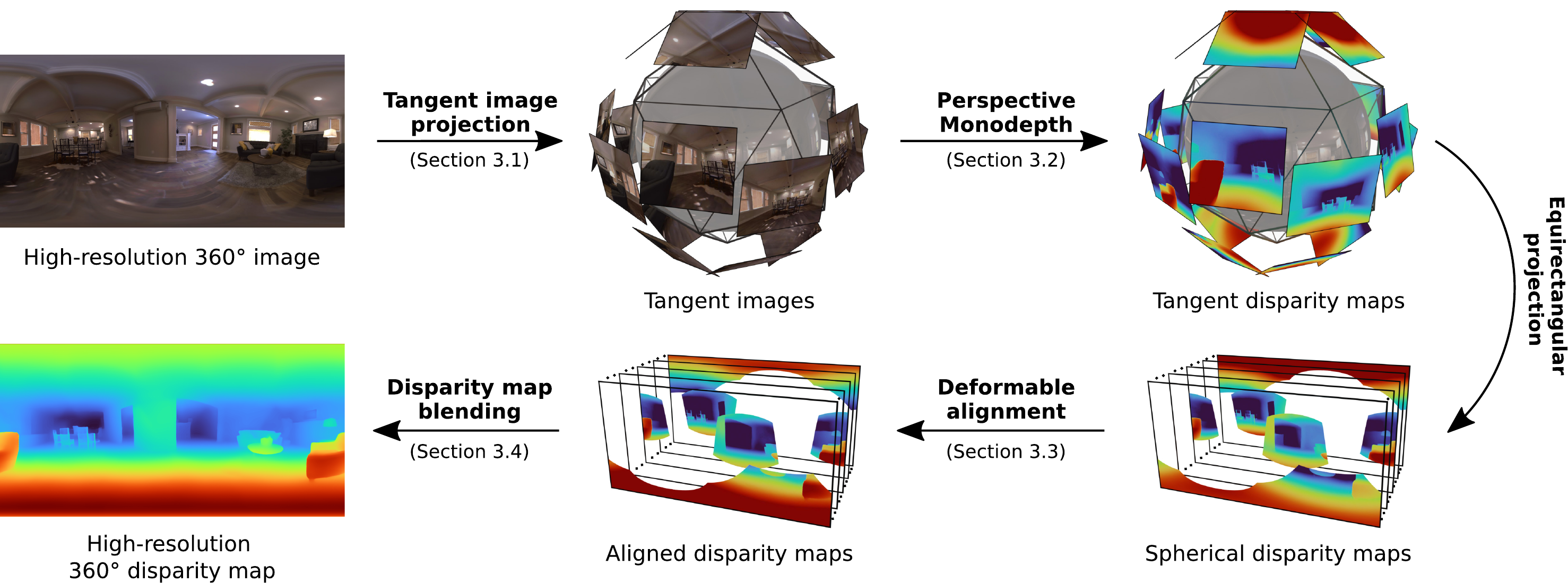

360° cameras can capture complete environments in a single shot, which makes 360° imagery alluring for many computer vision tasks. However, monocular depth estimation remains a challenge for 360° data, particularly at high resolutions such as 2K (2048×1024) and beyond, which are important for novel-view synthesis and virtual reality applications. Current CNN-based methods do not support such high resolutions because of limited GPU memory. In this work, we propose a flexible framework for monocular depth estimation from high-resolution 360° images using tangent images. We project the 360° input image onto a set of tangent planes that produce perspective views, which are suitable for the latest and most accurate state-of-the-art perspective monocular depth estimators. To achieve globally consistent disparity estimates, we recombine the individual depth estimates using deformable multi-scale alignment followed by gradient-domain blending. The result is a dense, high-resolution 360° depth map with a high level of detail, including for outdoor scenes, which existing methods do not support.
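
Projecting the equirectangular input onto a tangent plane amounts to an inverse gnomonic projection: each pixel of the perspective view is mapped to a latitude/longitude on the sphere and then looked up in the panorama. The following is a minimal sketch of that idea only, not the 360MonoDepth implementation. The function names, the equirectangular convention (longitude from -π to π left to right, latitude +π/2 on the top row), nearest-neighbour sampling, and the square view with a `fov_deg` field of view are all illustrative assumptions; the sketch does not reproduce how the paper places its tangent planes or chooses their resolution.

```python
import math


def gnomonic_inverse(x, y, lat0, lon0):
    """Map coordinates (x, y) on the plane tangent to the unit sphere at
    (lat0, lon0) back to (lat, lon) in radians (inverse gnomonic projection)."""
    rho = math.hypot(x, y)
    if rho < 1e-12:
        # The tangent point itself maps to the centre of the view.
        return lat0, lon0
    c = math.atan(rho)  # angular distance from the tangent point
    sin_c, cos_c = math.sin(c), math.cos(c)
    s = cos_c * math.sin(lat0) + y * sin_c * math.cos(lat0) / rho
    lat = math.asin(max(-1.0, min(1.0, s)))  # clamp against rounding error
    lon = lon0 + math.atan2(
        x * sin_c,
        rho * math.cos(lat0) * cos_c - y * math.sin(lat0) * sin_c,
    )
    return lat, lon


def sample_tangent_view(erp, lat0, lon0, fov_deg, size):
    """Hypothetical helper: sample a size x size perspective view centred at
    (lat0, lon0) from an equirectangular image given as a list of rows,
    using nearest-neighbour lookup."""
    h, w = len(erp), len(erp[0])
    t = math.tan(math.radians(fov_deg) / 2)  # half-extent of the tangent plane
    view = []
    for i in range(size):
        y = (1 - 2 * (i + 0.5) / size) * t  # plane y points up
        row = []
        for j in range(size):
            x = (2 * (j + 0.5) / size - 1) * t
            lat, lon = gnomonic_inverse(x, y, lat0, lon0)
            # Longitude wraps around horizontally; latitude is clamped vertically.
            col = int(math.floor((lon + math.pi) / (2 * math.pi) * w)) % w
            r = int(math.floor((math.pi / 2 - lat) / math.pi * h))
            row.append(erp[min(h - 1, max(0, r))][col])
        view.append(row)
    return view
```

For example, `sample_tangent_view(erp, 0.0, 0.0, 90.0, 512)` would return a 512×512 view centred on the front of the panorama. A perspective depth estimator would then run on each such view, after which the per-view estimates must still be aligned and blended back into one 360° map.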

Data

- Matterport3D 360º RGBD Dataset: 9684 RGB-D pairs from the original Matterport3D, at a resolution of 2048×1024. All details and usage instructions are in the accompanying README.md.
- Replica 360º 2k/4k RGBD: 130 RGB-D pairs rendered at 2048×1024 and 4096×2048.


Citation

@inproceedings{reyarea2021360monodepth,
  title={{360MonoDepth}: High-Resolution 360{\deg} Monocular Depth Estimation},
  author={Manuel Rey-Area and Mingze Yuan and Christian Richardt},
  booktitle={CVPR},
  year={2022}
}